My Python Documentation Toolchain

I tried to write a short technical article and it took me 6 weeks

In my day job I lead a Data Team that works in data engineering, analytics, and data science. As part of our work we’ve integrated our data warehouse with a growing set of services we’ve written in Python. As we’ve built these tools I’ve been pushing our team away from one-off scripts and notebooks toward structured Python packages with version control, tests, and documentation. This article focuses on our documentation system. I’m going to cover the tools and design decisions that allow all of our projects to have live, auto-updating documentation webpages, and why we bothered.

Our documentation strategy is structured around concentrating as much content as possible into Python docstrings. We standardize our docstrings using the Google Python Style Guide and enforce those conventions using a Flake8 plugin called darglint. We remind everyone to keep docstring content relevant by using GitLab merge templates. From those docstrings we then build static HTML pages using Sphinx and deploy these pages within our organization using GitLab Pages. The end result is readable, up-to-date online documentation. The most important result of this toolchain is not a set of perfect documentation (we’re a long way from that) but instead a framework that we can use to improve our documentation and build a shared understanding of our projects.

This article focuses on what we built and why we chose to build it. I’m not going to spend much time on how we set up our tools because most of the projects I’m going to mention are well-documented elsewhere. That being said, if you get stuck or have a question, feel free to leave a comment or find me on Twitter (@AlexVianaPro).

Let’s start with why we bothered to build a documentation system in the first place instead of just taking ad-hoc notes.

Why The Fuss About Documentation?

I think most people who write software would agree that projects should have at least some documentation and that major projects, like Pandas, should have exhaustive documentation. But for an internal medium-sized project with only a few users isn’t just a README file, sane variable names, and a few comment strings more than enough? More to the point, real projects have deadlines. Wouldn’t developer effort be better spent getting code out the door and not on comprehensive documentation?

In my opinion, for code to be truly high-quality it needs to be maintainable. Solid design principles can make it easier to understand what your code is doing. Tests can ensure your code is behaving as you intended. But documentation helps you understand why you are doing what you are doing. It adds context, and data work in particular is heavily contextual, relying on a nuanced understanding of upstream and downstream data flows.

While cutting corners on documentation is a pragmatic approach, and certainly something I’ve done myself, I don’t think it works well in the long run. In my experience it’s just another form of technical debt. In the extreme but not uncommon case of a single project maintainer, years of system knowledge can be lost when that individual leaves the organization.

These are well-known arguments but I want to push this idea of the value of documentation beyond just creating maintainable code. In a modern data team it’s common to have folks that are new to writing software in the form of a project and not just one-off scripts or notebooks. This is a transition I can’t encourage enough but one that can be initially very confusing for the practitioner. Forcing these team members to slow down, think about what they’re doing, and be explicit about their intentions and understanding in the documentation, is beneficial not only for code quality but also for their own professional development.

Let me close this section by saying that I’ve never met a technical team, mine included, that was satisfied with their documentation — or their testing for that matter. But if you can agree as an organization that documentation is a critical part of delivering long-term value then you can at least strive to make sure your documentation is constantly improving. But to do that at scale you need a solid repeatable structure, and for that you need tools.

Why So Many Tools?

Even if you buy my argument about documentation being important, why do we need so many tools? Can’t you just … keep the documentation up-to-date? My argument is that setting up a toolchain for your documentation gives your project structure and a direction, just like with a testing or a deployment system. As long as you have structure and direction you can iterate and evolve. Without it, you’re lost and “documentation” will be scattered throughout your project, often completely outdated and irrelevant.

I see more parallels here between documentation and testing. One of the main arguments in favor of testing is that, beyond the immediate value of tests as code validation, tests also encourage people to write code that is testable by design. That is, the code is intended from the start to be intelligible and verifiable, which makes it better code overall. I think the same thing happens with documentation. When documentation becomes part of the structure in your projects, people will be inherently concerned with communicating all the ideas surrounding their code and their work will start to become more documentable (and intelligible) by design.

But that structure needs to start from the ground up.

Documentation with Python Docstrings

Python has the concept of a docstring, which is a multiline string at the start of a module, function, class, or method. If you’re not familiar it looks like this:

    def raise_to_power(base, exponent):
        """This is a docstring"""
        # This is a comment
        return base ** exponent

Like their name says, docstrings are intended to hold documentation. This might seem like a trivial feature at first; after all, we can also put important information in the README or comments. So why should we use docstrings?

I’ve found that you get the most out of tools when you lean into their opinionated design decisions. Python as a language has made an intentional decision that documentation belongs in docstrings and as a result tools and best practices have been built up around this assumption. Aligning to these conventions means you can take advantage of all these additional tools. For example, if you load the previous function into a Python REPL you’ll find it already knows what to do with the docstring:

    >>> help(raise_to_power)
    Help on function raise_to_power in module __main__:

    raise_to_power(base, exponent)
        This is a docstring

You can get the same response with the ? operator in IPython or a Jupyter notebook. This is just a hint at what we can do with information once it’s collected into docstrings. But to do more, we need to standardize our docstrings so other tools can make use of them.
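To make the point about tooling concrete, here is a minimal sketch of how any Python code can read a docstring at runtime. It reuses the raise_to_power example from above and relies only on the standard library; this kind of introspection is what tools such as help() build on.

```python
import inspect


def raise_to_power(base, exponent):
    """This is a docstring"""
    # This is a comment
    return base ** exponent


# The raw docstring is stored on the function object itself.
print(raise_to_power.__doc__)

# inspect.getdoc returns the same text with indentation cleaned up,
# which matters more once docstrings span multiple lines.
print(inspect.getdoc(raise_to_power))
```

Note that the comment inside the function is not available this way; only the docstring travels with the object.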

Docstring Guidelines with the Google Style Guide

There are a couple of different conventions for Python docstrings but I strongly prefer the conventions from the Google Python Style Guide. I’m a big fan of the Google syntax because it strikes a balance between human and machine readable, similar in my opinion to Markdown.

We’ll leverage the fact that this format is machine readable when we start building webpages for our documentation but first let’s look at a sample. Here’s the same function we just saw but now with a Google style docstring:

    def raise_to_power(base, exponent):
        """
        Raise a base to an exponent, i.e. base ** exponent.

        Args:
            base (int): The base value
            exponent (int): The exponent value

        Returns:
            int: The output of the calculation
        """
        return base ** exponent

I think most people will agree that this is readable, but some people might question if simple functions should be documented this extensively. Have we really added anything to our project in this case? I would argue yes.

First of all, never underestimate the extent to which you have made assumptions about the context and clarity of intent in your code. In the case of our example, I would want this docstring to explain why we are wrapping functionality that Python already provides into a function. There’s a useful side effect of this: forcing myself to write out assumptions in docstrings has become a form of rubber duck debugging for me that has frequently caused me to change my mind about a particular implementation.

Second, as a practical matter, I already have enough decisions to make in writing my code. Having no input on when and how I add docstrings actually frees me to focus on the decisions that do matter. And as we’re about to see, having a uniform documentation standard not only frees me to think about what matters, it allows us to leverage automation.

Automation with darglint, Flake8, and Merge Templates

One of the pain points about maintaining docstrings is that it’s hard to keep them up-to-date. One of the reasons I like to keep documentation right in the code is that it makes it easier to remember to update both. But having to remember something is always an unreliable plan. Fortunately, we can lean on some automation to help.

We use GitLab CI/CD in the cloud and Makefiles locally for all our code quality checks including testing and linting (though I have my eye on migrating the latter to the Python pre-commit tool). For linting, we first run auto-formatting with the Black code formatter and then do a pass with Flake8. To make sure our docstrings are accurate we use a tool called darglint, which can run as a Flake8 extension. Darglint (“document argument linter”) checks to make sure that your function call signature and docstrings match. If you change one without updating the other, Flake8 will fail.
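To illustrate the kind of mismatch this check catches, here is a deliberately simplified sketch that compares a function’s signature against the Args section of its Google style docstring. This is not how darglint is implemented; the helpers documented_args and mismatched_args are hypothetical names made up for this example, and the parsing ignores edge cases such as wrapped descriptions.

```python
import inspect
import re


def documented_args(func):
    """Return argument names listed under "Args:" in a Google style docstring."""
    doc = inspect.getdoc(func) or ""
    names = []
    in_args = False
    for line in doc.splitlines():
        stripped = line.strip()
        if stripped == "Args:":
            in_args = True
            continue
        if in_args:
            # A blank line or an unindented line (e.g. "Returns:") ends the block.
            if not stripped or not line.startswith(" "):
                in_args = False
                continue
            # Matches lines such as "    base (int): The base value".
            match = re.match(r"\s+(\w+)\s*(\([^)]*\))?:", line)
            if match:
                names.append(match.group(1))
    return names


def mismatched_args(func):
    """Return (missing_from_docs, extra_in_docs) for a function."""
    actual = list(inspect.signature(func).parameters)
    documented = documented_args(func)
    missing = [name for name in actual if name not in documented]
    extra = [name for name in documented if name not in actual]
    return missing, extra


# The signature gained a "modulus" argument but the docstring was not updated.
def raise_to_power(base, exponent, modulus=None):
    """
    Raise a base to an exponent, optionally modulo a third value.

    Args:
        base (int): The base value
        exponent (int): The exponent value

    Returns:
        int: The output of the calculation
    """
    return pow(base, exponent, modulus)


print(mismatched_args(raise_to_power))  # (['modulus'], [])
```

A real linter reports this kind of drift as a lint error, so the pipeline fails until the docstring and the signature agree again.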

It’s worth taking just a moment to talk about tool selection here. Darglint is a small project that seems pretty stable. Nonetheless, it’s much earlier in its development than anything else in our projects, and I’ve been burnt before by teammates including trendy new tools into our code, only to find a few years later that we have a critical dependency on a seemingly abandoned piece of technology.

A key consideration for me when evaluating an architecture or tool is making sure I have a plan, or at least the ability, to migrate off of it. In this case, if we had to rip out darglint tomorrow, we would lose the ability to lint our docstrings, which would be an annoyance at worst and would only require changing a few lines in some config files. I decided this was worth the risk to help maintain our docstrings.

Another way we keep the docs up-to-date is to use GitLab merge templates, which include the ability to create a blocking markdown checkbox for the developer to confirm they’ve updated the docs. This is a helpful nudge but it doesn’t help if you forget what you changed or don’t realize the scope of the impact of your changes. Ultimately, I don’t think there is a solution for that problem. But I do think making high-impact docs that people want to use will help you find the inevitable documentation problems sooner.

So let’s make our docs high-impact by building some webpages from our docstrings.

Documentation Webpages with Sphinx

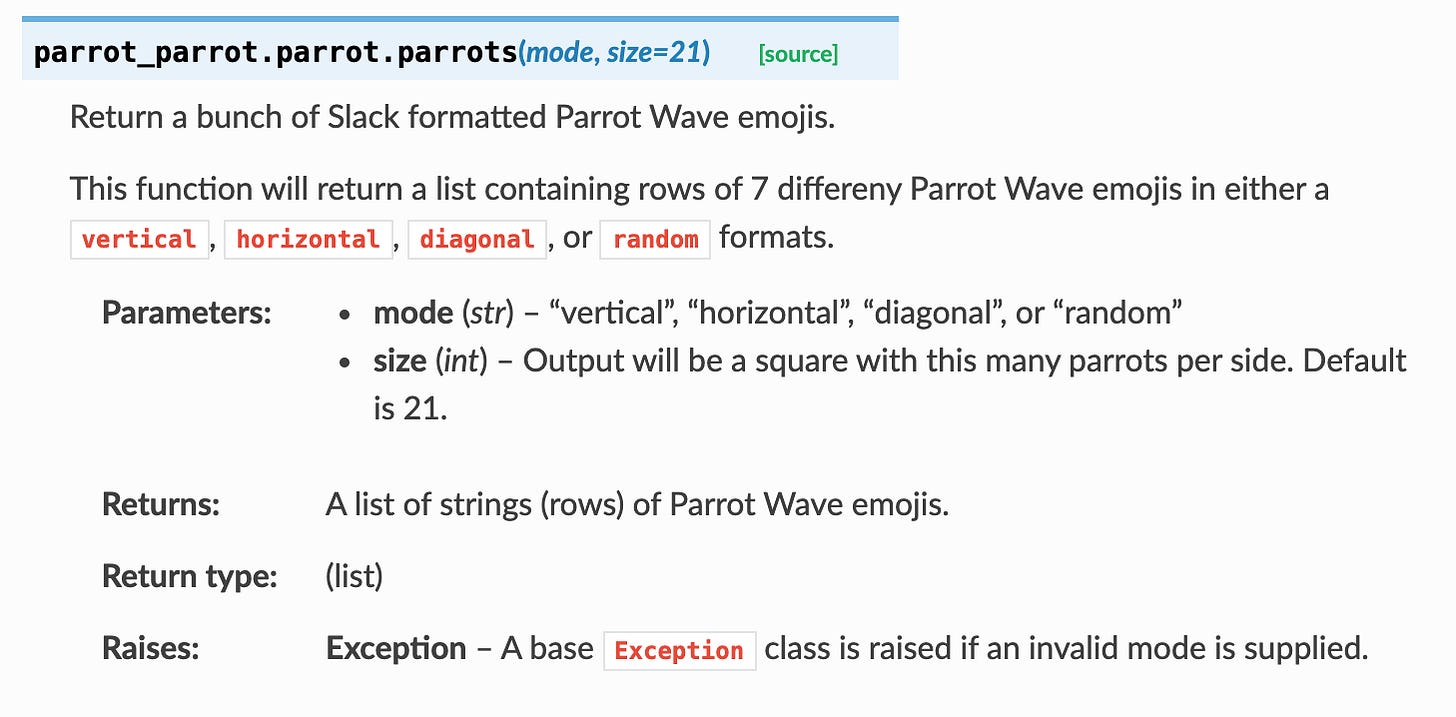

At this point in the process if we’ve written our docstrings with even a moderate amount of care we now have an important body of knowledge about how our code works. And because we’ve written them using strict standards we can now export our documentation into webpages. To do this we use a popular Python library called Sphinx which generates static HTML pages. Sphinx was developed to create the documentation for the Python core library so the formatting out-of-the-box looks pretty similar to the historical python.org docs.

You can build a perfectly usable documentation page using just vanilla Sphinx, but with a few plug-ins we can make a much nicer site with much less work. First, we add the autodoc extension which will automatically find and collect all the docstrings in our package. Then we add the viewcode extension which will allow us to click through to the raw source code within the doc pages themselves. The napoleon extension allows Sphinx to parse our Google style docstrings as opposed to Sphinx’s default reStructuredText format. Finally, and this is just personal preference, we can set our HTML theme to the ReadTheDocs theme.

Like I said, this is not intended to be a tutorial so I’ve skipped a few steps such as setting up your conf.py file, your base index.rst file, and making sure your package is installable and not just a script. Fortunately, there are plenty of resources on these steps. Setting this stuff up is honestly a pain, but you only have to do it once and after that it’s pretty stable. And once you’ve figured it out for one project it’s easy to repeat for all your projects.
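For a rough sense of how small the extension setup is, here is a minimal sketch of the relevant part of a conf.py. The project and author values are placeholders I made up, and this is not a complete configuration; it only wires up the three extensions and the theme mentioned above, assuming the sphinx_rtd_theme package is installed separately.

```python
# docs/conf.py: a minimal sketch, not a complete configuration.
project = "my_package"  # hypothetical package name
author = "Data Team"  # placeholder

extensions = [
    "sphinx.ext.autodoc",   # collect docstrings from the installed package
    "sphinx.ext.viewcode",  # link doc pages to highlighted source code
    "sphinx.ext.napoleon",  # parse Google style docstrings
]

# napoleon can parse both Google and NumPy styles; keep only Google enabled.
napoleon_google_docstring = True
napoleon_numpy_docstring = False

# The ReadTheDocs theme ships as the separate sphinx_rtd_theme package.
html_theme = "sphinx_rtd_theme"
```

Because conf.py is plain Python, it can live in version control alongside the package and be copied from project to project with only the metadata changed.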

So now that we have a nice looking document on our local machine, how do we publish it? After all, telling someone to RTFM just doesn’t have the same bite to it when they have to go build the docs from source on their local machine.

Going Live with GitLab Pages

We use GitLab as our version control platform, and it has a Pages feature that allows you to host static webpages from pipeline build artifacts (GitHub has similar functionality). The GitLab examples include a Python + Sphinx example that you can pretty much just cut and paste into your CI/CD configuration, and you’ve now got a live, automatically documented project.

Nice work!

Closing Thoughts

It’s worth pointing out that something interesting happens at this point. When docs are published and automatically updated I’ve noticed they become a lot more real to teams. People are proud of them and want to keep them up-to-date. Questions get directed to the docs, the docs get updated to be more helpful, and you start to get a feedback loop.

There were a number of other directions I wanted to take this article as I was writing it, including building a documentation ecosystem by connecting the other self-documenting services in our cloud warehouse such as Looker and dbt, or showing how data pipelines could follow the example of the FastAPI project and use Python type hints to build even more automatic documentation. However, I eventually realized these topics were way too much for a single article so I split them out into drafts for future articles. I’m quickly (re)learning that in writing, just like in programming, scoping and structure are everything.

Thanks for reading!

Odds and Ends

Around the Internet:

Overdiagnosis (The Limits of Inference) - If you love data and philosophy you should check out my friend Clare’s substack. Her last article in particular on false positives and what they mean for widespread COVID testing is an excellent reminder of the importance of core statistical principles.

Currently Listening:

Portrait of Wes (Wes Montgomery Trio): A friend of mine recently turned me on to under-appreciated Indiana jazz guitar player Wes Montgomery. Opting for Melvin Rhyne on organ instead of a bass player, his trios have an especially interesting sound. Check out the up-tempo cover of Art Blakey’s “Moanin’”

Currently Reading:

Death’s End / Three-Body Problem Trilogy: Just finished up this series. If you like your science fiction to take on a truly cosmological scale, are pretty comfortable with plot devices that rely heavily on physics concepts like the speed of light or the strong atomic force, and can handle characters that act more like platonic ideas of humans than actual people - then this is the trilogy for you. It’s also interesting to think about how the author’s Chinese cultural perspective comes through in his writing. If that last bit seems interesting I’d recommend China in Ten Words.

Nature’s Metropolis: Absolutely blown away by this economic and business history of Chicago - I see why it was a Pulitzer Prize finalist. For example the chapter on grain could not sound more boring and yet ends up being an amazing primer for all the current stock market upheaval around GameStop.

You can catch me on Twitter at @AlexVianaPro, on GitHub, or on LinkedIn. Thanks to my wonderful wife for helping with my innumerable typos.